
Functional API

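- The Sequential API has limits when building complex models, such as ones that share layers or take several kinds of inputs and outputs, so the Functional API is used to build those more complex models.

- Each layer is defined as a function that is called on the output of the previous layer.

- An input layer must always be defined.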

▶ Basic usage

from tensorflow.keras.layers import Input, Dense, concatenate

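from tensorflow.keras.models import Model, load_model

inputs = Input(shape=(10,)) # input layer

hidden1 = Dense(64, activation='relu')(inputs) # connect hidden layer 1 to the input layer

hidden2 = Dense(64, activation='relu')(hidden1) # connect hidden layer 2 to hidden layer 1

output = Dense(1, activation='sigmoid')(hidden2) # connect the output layer to hidden layer 2

model = Model(inputs=inputs, outputs=output) # store the input and output layers in the model

- Input() defines the shape of the input.

- Each layer is called with the previous layer as its input, and the result is assigned to a variable.

- Model() defines the inputs and outputs of the model.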

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

model.fit(X, y, epochs=5000, verbose=0)

model.predict(X)

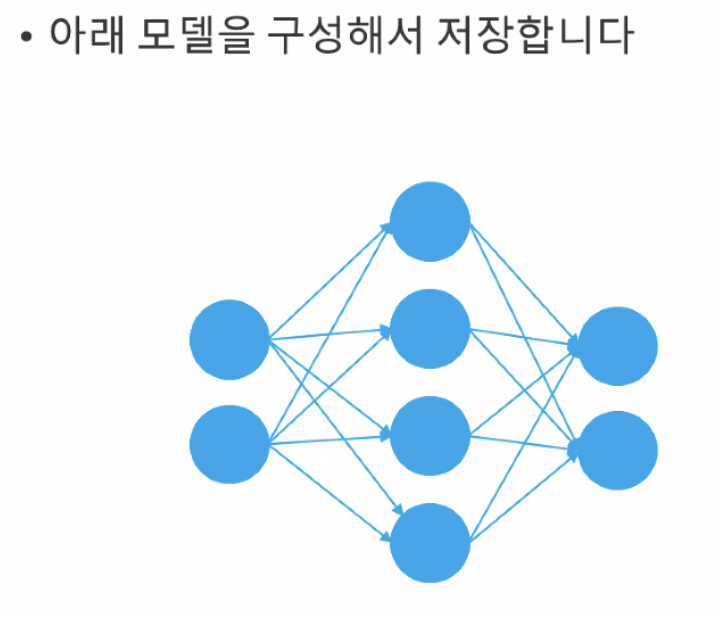
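The `fit` and `predict` calls in the basic usage code assume that `X` and `y` already exist. A minimal sketch of stand-in data matching `Input(shape=(10,))` and the single sigmoid output follows; the sample count, the random values, and the labeling rule are hypothetical and only there so the snippet has something to train on.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: 200 samples with 10 features each,
# matching Input(shape=(10,)) in the basic usage code.
X = rng.standard_normal((200, 10)).astype("float32")

# Hypothetical binary labels (1 when the feature sum is positive),
# matching the single sigmoid output and the binary_crossentropy loss.
y = (X.sum(axis=1) > 0).astype("float32")

print(X.shape, y.shape)
```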

▶ Model implementation

inputs_1 = Input(shape=(2,))

hidden1_1 = Dense(4, activation='elu')(inputs_1)

output_1 = Dense(2, activation='sigmoid')(hidden1_1)

model_1 = Model(inputs=inputs_1, outputs=output_1)

model_1.summary()

# Model: "model_9"

# _________________________________________________________________

# Layer (type) Output Shape Param #

# =================================================================

# input_10 (InputLayer) [(None, 2)] 0

# dense_21 (Dense) (None, 4) 12

# dense_22 (Dense) (None, 2) 10

# =================================================================

# Total params: 22

# Trainable params: 22

# Non-trainable params: 0

# _________________________________________________________________

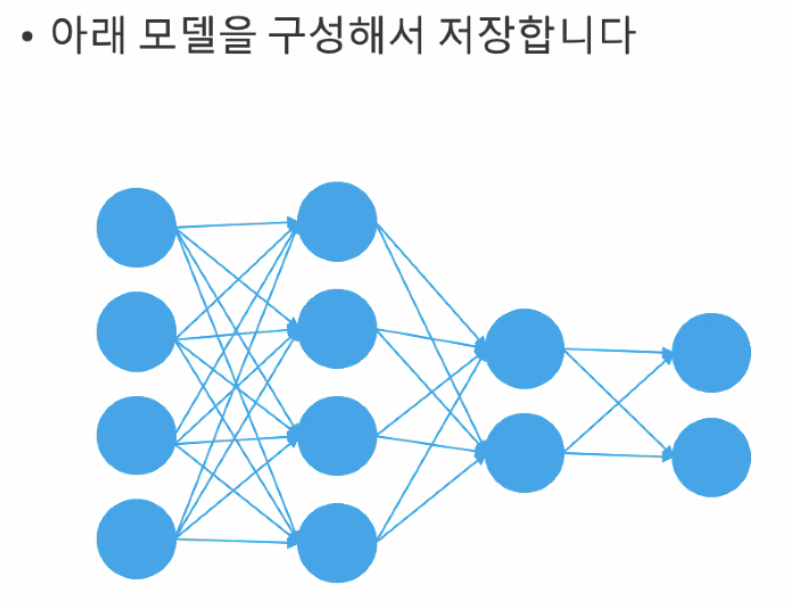

inputs_2 = Input(shape=(4,))

hidden2_1 = Dense(4, activation='elu')(inputs_2)

hidden2_2 = Dense(2, activation='elu')(hidden2_1)

output_2 = Dense(2, activation='sigmoid')(hidden2_2)

model_2 = Model(inputs=inputs_2, outputs=output_2)

model_2.summary()

# Model: "model_10"

# _________________________________________________________________

# Layer (type) Output Shape Param #

# =================================================================

# input_11 (InputLayer) [(None, 4)] 0

# dense_23 (Dense) (None, 4) 20

# dense_24 (Dense) (None, 2) 10

# dense_25 (Dense) (None, 2) 6

# =================================================================

# Total params: 36

# Trainable params: 36

# Non-trainable params: 0

# _________________________________________________________________
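The `Param #` column in both summaries can be checked by hand: a Dense layer has one weight per input-unit pair plus one bias per unit. A small sketch of that arithmetic, where `dense_params` is a helper name made up for this example:

```python
def dense_params(n_inputs: int, units: int) -> int:
    """Trainable parameters of a Dense layer: weights plus biases."""
    return n_inputs * units + units

# model_1: Input(2) -> Dense(4) -> Dense(2)
model_1_params = [dense_params(2, 4), dense_params(4, 2)]

# model_2: Input(4) -> Dense(4) -> Dense(2) -> Dense(2)
model_2_params = [dense_params(4, 4), dense_params(4, 2), dense_params(2, 2)]

print(model_1_params, sum(model_1_params))  # [12, 10] 22
print(model_2_params, sum(model_2_params))  # [20, 10, 6] 36
```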

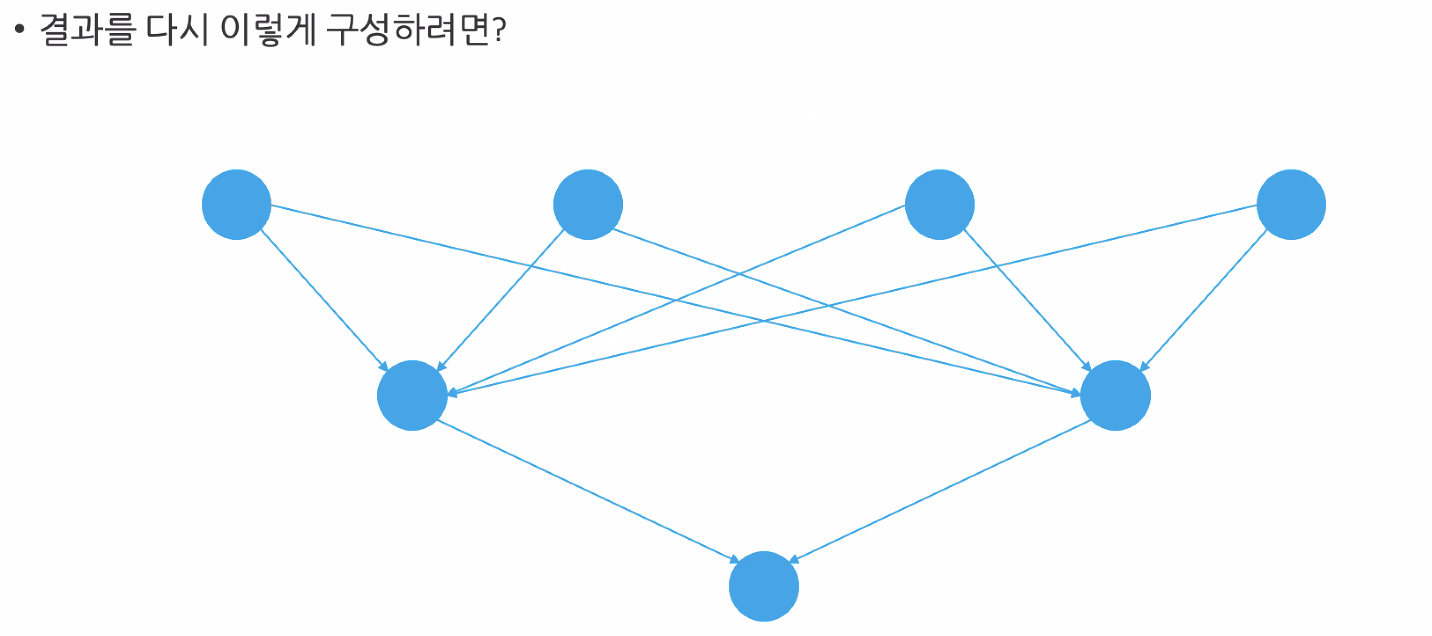

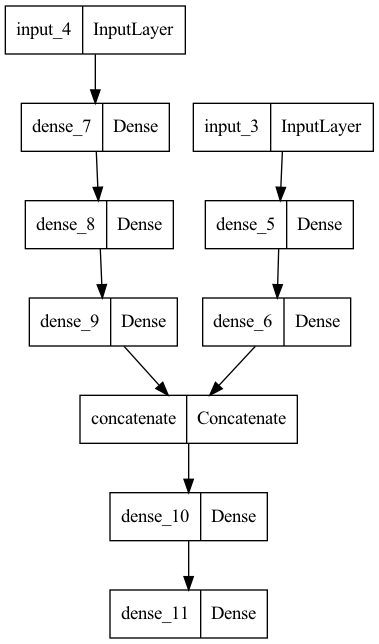

▷ Multi-input model (model that accepts multiple inputs)

inputs_1 = Input(shape=(2,))

hidden1_1 = Dense(4, activation='elu')(inputs_1)

output_1 = Dense(2, activation='sigmoid')(hidden1_1)

model_1 = Model(inputs=inputs_1, outputs=output_1)

inputs_2 = Input(shape=(4,))

hidden2_1 = Dense(4, activation='elu')(inputs_2)

hidden2_2 = Dense(2, activation='elu')(hidden2_1)

output_2 = Dense(2, activation='sigmoid')(hidden2_2)

model_2 = Model(inputs=inputs_2, outputs=output_2)

# concatenate the outputs of the two neural networks

result = concatenate([model_1.output, model_2.output])

z = Dense(2, activation="relu")(result)

z = Dense(1, activation="linear")(z)

model_A = Model(inputs=[model_1.input, model_2.input], outputs=z)
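To see what `concatenate` does to the shapes, here is a NumPy-only sketch, not Keras itself: the two branch outputs, each with 2 units, are joined along the last axis, so the following `Dense(2)` layer receives 4 features per sample. The batch size of 3 and the array values are arbitrary placeholders.

```python
import numpy as np

batch = 3  # arbitrary batch size for illustration

# Stand-ins for model_1.output and model_2.output: (batch, 2) each.
out_1 = np.zeros((batch, 2))
out_2 = np.ones((batch, 2))

# Keras' concatenate joins along the last axis by default (axis=-1).
result = np.concatenate([out_1, out_2], axis=-1)

print(result.shape)  # (3, 4)
```

When training or predicting with `model_A`, the inputs are passed as a list in the same order as `inputs=[model_1.input, model_2.input]`, for example `model_A.predict([X1, X2])` with `X1` of shape `(n, 2)` and `X2` of shape `(n, 4)` (both names are placeholders).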

▶ save_weights, load_weights

model.save_weights('file_path')

model.load_weights('file_path')

▶ plot_model

!pip install pydot

from tensorflow.keras.utils import plot_model

plot_model(model_2)

plot_model(model_A)

▶ xavier initialization

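- The weight initialization technique commonly used by deep learning frameworks up to 2015.

- It is a result derived on the assumption that the activation function is linear.

- sigmoid and tanh are symmetric about their center and can be regarded as linear near it, so the Xavier initial values suit them.

- When using ReLU, the He initial values are recommended instead.

import numpy as np

w = np.random.randn(node_num, node_num) / np.sqrt(node_num) # node_num: number of nodes in the previous layer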
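The effect of the scaling can be checked without Keras. The sketch below pushes random data through five tanh layers and measures how many activations saturate near ±1, once with unscaled weights (standard deviation 1) and once with the Xavier scaling from the line above. The layer count, `node_num = 100`, the 0.99 saturation threshold, and the helper name `saturated_fraction` are assumptions made for this illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
node_num = 100  # nodes per layer (assumed for the demo)
x = rng.standard_normal((1000, node_num))

def saturated_fraction(weight_std, n_layers=5):
    """Per-layer fraction of tanh activations with |a| > 0.99."""
    a = x
    fractions = []
    for _ in range(n_layers):
        w = rng.standard_normal((node_num, node_num)) * weight_std
        a = np.tanh(a @ w)
        fractions.append(float(np.mean(np.abs(a) > 0.99)))
    return fractions

naive = saturated_fraction(1.0)                       # no scaling
xavier = saturated_fraction(1.0 / np.sqrt(node_num))  # Xavier scaling

print("naive :", np.round(naive, 3))
print("xavier:", np.round(xavier, 3))
```

With unscaled weights most activations should pile up near ±1, where the tanh gradient is close to zero; with the Xavier scaling almost none do.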

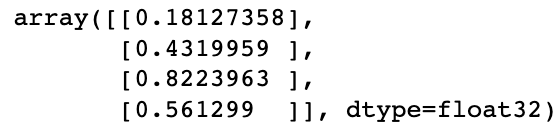

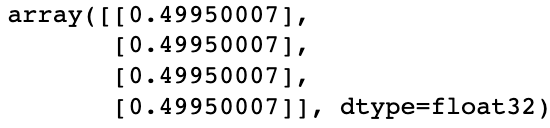

▷ no xavier (Keras defaults; note that Dense already defaults to glorot_uniform, a uniform Xavier variant)

import pandas as pd

xor = {'x1':[0,0,1,1], 'x2':[0,1,0,1], 'y':[0,1,1,0]}

XOR = pd.DataFrame(xor)

X = XOR.drop('y', axis=1)

y = XOR.y

ip = Input(shape=(2,))

n = Dense(2, activation='sigmoid')(ip)

n = Dense(1, activation='linear')(n)

model = Model(inputs=ip, outputs=n)

model.compile(loss='mse', optimizer='rmsprop')

model.fit(X, y, epochs=1400, verbose=0)

model.predict(X)

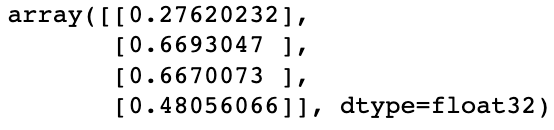

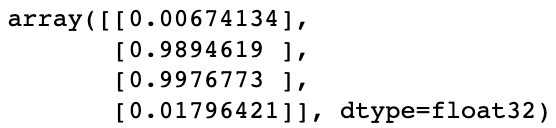

▷ with xavier initialization

xor = {'x1':[0,0,1,1], 'x2':[0,1,0,1], 'y':[0,1,1,0]}

XOR = pd.DataFrame(xor)

X = XOR.drop('y', axis=1)

y = XOR.y

ip = Input(shape=(2,))

n = Dense(2, activation='sigmoid')(ip)

n = Dense(1, activation='linear')(n)

model = Model(inputs=ip, outputs=n)

weights = model.get_weights()

xavier_w12 = np.random.randn(2, 2) / np.sqrt(2)

xavier_w21 = np.random.randn(2, 1) / np.sqrt(2)

xavier_weights = weights

xavier_weights[0] = xavier_w12

xavier_weights[2] = xavier_w21

model.set_weights(xavier_weights)

model.compile(loss='mse', optimizer='rmsprop')

model.fit(X, y, epochs=1400, verbose=0)

model.predict(X)
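`get_weights()` returns the parameters in layer order as `[kernel_1, bias_1, kernel_2, bias_2]`, which is why indices 0 and 2 are the ones overwritten above. The NumPy sketch below mirrors that layout for the untrained 2-2-1 network and computes by hand what the forward pass does with it; it is a stand-in for illustration, not the Keras implementation, and the zero biases are an assumption based on Keras' default bias initializer.

```python
import numpy as np

rng = np.random.default_rng(0)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)  # XOR inputs

# Same layout as model.get_weights(): kernels at 0 and 2, biases at 1 and 3.
weights = [
    rng.standard_normal((2, 2)) / np.sqrt(2),  # kernel of the hidden layer
    np.zeros(2),                               # bias of the hidden layer
    rng.standard_normal((2, 1)) / np.sqrt(2),  # kernel of the output layer
    np.zeros(1),                               # bias of the output layer
]

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Forward pass: sigmoid hidden layer, then a linear output layer.
hidden = sigmoid(X @ weights[0] + weights[1])
pred = hidden @ weights[2] + weights[3]

print([w.shape for w in weights])  # [(2, 2), (2,), (2, 1), (1,)]
print(pred.shape)                  # (4, 1)
```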

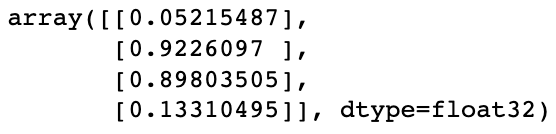

▷ with xavier initialization & tanh

xor = {'x1':[0,0,1,1], 'x2':[0,1,0,1], 'y':[0,1,1,0]}

XOR = pd.DataFrame(xor)

X = XOR.drop('y', axis=1)

y = XOR.y

ip = Input(shape=(2,))

n = Dense(2, activation='tanh')(ip)

n = Dense(1, activation='linear')(n)

model = Model(inputs=ip, outputs=n)

weights = model.get_weights()

xavier_w12 = np.random.randn(2, 2) / np.sqrt(2)

xavier_w21 = np.random.randn(2, 1) / np.sqrt(2)

xavier_weights = weights

xavier_weights[0] = xavier_w12

xavier_weights[2] = xavier_w21

model.set_weights(xavier_weights)

model.compile(loss='mse', optimizer='rmsprop')

model.fit(X, y, epochs=1400, verbose=0)

model.predict(X)

▷ with xavier initialization & relu

xor = {'x1':[0,0,1,1], 'x2':[0,1,0,1], 'y':[0,1,1,0]}

XOR = pd.DataFrame(xor)

X = XOR.drop('y', axis=1)

y = XOR.y

ip = Input(shape=(2,))

n = Dense(2, activation='relu')(ip)

n = Dense(1, activation='linear')(n)

model = Model(inputs=ip, outputs=n)

weights = model.get_weights()

xavier_w12 = np.random.randn(2, 2) / np.sqrt(2)

xavier_w21 = np.random.randn(2, 1) / np.sqrt(2)

xavier_weights = weights

xavier_weights[0] = xavier_w12

xavier_weights[2] = xavier_w21

model.set_weights(xavier_weights)

model.compile(loss='mse', optimizer='rmsprop')

model.fit(X, y, epochs=1400, verbose=0)

model.predict(X)
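Since the Xavier section recommends the He initial values for ReLU, the same experiment could swap in He-scaled weights. A minimal sketch of that scaling, assuming the common normal-distribution form with standard deviation sqrt(2 / fan_in); `he_normal` is a helper name invented here, and the resulting arrays would take the place of `xavier_w12` and `xavier_w21`.

```python
import numpy as np

rng = np.random.default_rng(0)

def he_normal(fan_in, fan_out):
    """He initial values: normal with standard deviation sqrt(2 / fan_in)."""
    return rng.standard_normal((fan_in, fan_out)) * np.sqrt(2.0 / fan_in)

he_w12 = he_normal(2, 2)  # kernel for Dense(2, activation='relu')
he_w21 = he_normal(2, 1)  # kernel for the linear output layer (same scaling, for simplicity)

# Sanity check on a larger layer: the empirical std approaches sqrt(2 / fan_in).
big = he_normal(400, 400)
print(he_w12.shape, he_w21.shape)
print(float(big.std()), float(np.sqrt(2.0 / 400)))
```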

▷ with xavier initialization & selu

xor = {'x1':[0,0,1,1], 'x2':[0,1,0,1], 'y':[0,1,1,0]}

XOR = pd.DataFrame(xor)

X = XOR.drop('y', axis=1)

y = XOR.y

ip = Input(shape=(2,))

n = Dense(2, activation='selu')(ip)

n = Dense(1, activation='linear')(n)

model = Model(inputs=ip, outputs=n)

weights = model.get_weights()

xavier_w12 = np.random.randn(2, 2) / np.sqrt(2)

xavier_w21 = np.random.randn(2, 1) / np.sqrt(2)

xavier_weights = weights

xavier_weights[0] = xavier_w12

xavier_weights[2] = xavier_w21

model.set_weights(xavier_weights)

model.compile(loss='mse', optimizer='rmsprop')

model.fit(X, y, epochs=1400, verbose=0)

model.predict(X)
